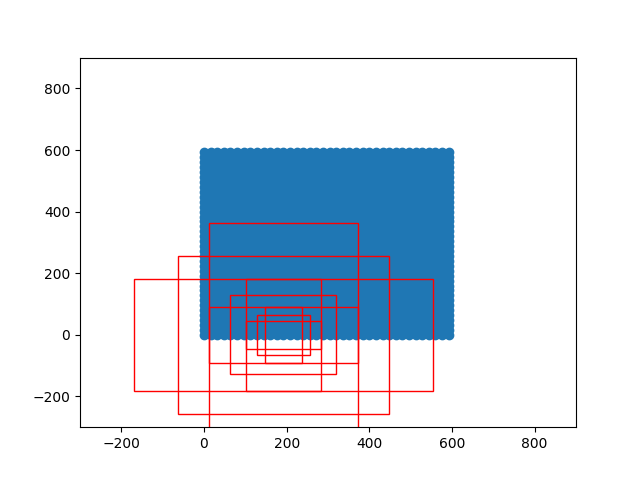

Faster R-CNN (fastrcnn) network structure reproduction

1.backbone-resnet50

    import math

    import torch
    import torch.nn as nn


    class Bottleneck(nn.Module):
        expansion = 4

        def __init__(self, inplanes, planes, stride=1, downsample=None):
            super(Bottleneck, self).__init__()
            self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, stride=stride, bias=False)
            self.bn1 = nn.BatchNorm2d(planes)

            self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=1, padding=1, bias=False)
            self.bn2 = nn.BatchNorm2d(planes)

            self.conv3 = nn.Conv2d(planes, planes * 4, kernel_size=1, bias=False)
            self.bn3 = nn.BatchNorm2d(planes * 4)

            self.relu = nn.ReLU(inplace=True)
            self.downsample = downsample
            self.stride = stride

        def forward(self, x):
            residual = x

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)
            out = self.relu(out)

            out = self.conv3(out)
            out = self.bn3(out)

            if self.downsample is not None:
                residual = self.downsample(x)

            out += residual
            out = self.relu(out)
            return out


    class ResNet(nn.Module):
        def __init__(self, block, layers, num_classes=1000):
            #-----------------------------------#
            #   Assume the input image is 600,600,3
            #-----------------------------------#
            super(ResNet, self).__init__()
            self.inplanes = 64

            # 600,600,3 -> 300,300,64
            self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False)
            self.bn1 = nn.BatchNorm2d(64)
            self.relu = nn.ReLU(inplace=True)

            # 300,300,64 -> 150,150,64
            self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=0, ceil_mode=True)

            # 150,150,64 -> 150,150,256
            self.layer1 = self._make_layer(block, 64, layers[0])
            # 150,150,256 -> 75,75,512
            self.layer2 = self._make_layer(block, 128, layers[1], stride=2)
            # 75,75,512 -> 38,38,1024; this yields the shared 38,38,1024 feature layer
            self.layer3 = self._make_layer(block, 256, layers[2], stride=2)
            # self.layer4 is used in the classifier model
            self.layer4 = self._make_layer(block, 512, layers[3], stride=2)

            self.avgpool = nn.AvgPool2d(7)
            self.fc = nn.Linear(512 * block.expansion, num_classes)

            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                    m.weight.data.normal_(0, math.sqrt(2. / n))
                elif isinstance(m, nn.BatchNorm2d):
                    m.weight.data.fill_(1)
                    m.bias.data.zero_()

        def _make_layer(self, block, planes, blocks, stride=1):
            downsample = None
            #-------------------------------------------------------------------#
            #   The residual branch needs a downsample whenever height and width
            #   are compressed (stride != 1) or the channel count changes
            #-------------------------------------------------------------------#
            if stride != 1 or self.inplanes != planes * block.expansion:
                downsample = nn.Sequential(
                    nn.Conv2d(self.inplanes, planes * block.expansion, kernel_size=1, stride=stride, bias=False),
                    nn.BatchNorm2d(planes * block.expansion),
                )
            layers = []
            layers.append(block(self.inplanes, planes, stride, downsample))
            self.inplanes = planes * block.expansion
            for i in range(1, blocks):
                layers.append(block(self.inplanes, planes))
            return nn.Sequential(*layers)

        def forward(self, x):
            x = self.conv1(x)
            x = self.bn1(x)
            x = self.relu(x)
            x = self.maxpool(x)

            x = self.layer1(x)
            x = self.layer2(x)
            x = self.layer3(x)
            x = self.layer4(x)

            x = self.avgpool(x)
            x = x.view(x.size(0), -1)
            x = self.fc(x)
            return x


    def resnet50():
        model = ResNet(Bottleneck, [3, 4, 6, 3])
        #----------------------------------------------------------------------------#
        #   Feature extraction part: conv1 through model.layer3,
        #   which finally yields a 38,38,1024 feature layer
        #----------------------------------------------------------------------------#
        features = list([model.conv1, model.bn1, model.relu, model.maxpool, model.layer1, model.layer2, model.layer3])
        #----------------------------------------------------------------------------#
        #   Classification part: model.layer4 through model.avgpool
        #----------------------------------------------------------------------------#
        classifier = list([model.layer4, model.avgpool])

        features = nn.Sequential(*features)
        classifier = nn.Sequential(*classifier)
        return features, classifier


    extractor, classifier = resnet50()
    print(extractor)

Output:

    Sequential(
      (0): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)
      (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (2): ReLU(inplace=True)
      (3): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=True)
      (4): Sequential(
        (0): Bottleneck(
          (conv1): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (relu): ReLU(inplace=True)
          (downsample): Sequential(
            (0): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
            (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (1): Bottleneck(
          (conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (relu): ReLU(inplace=True)
        )
        (2): Bottleneck(
          (conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (relu): ReLU(inplace=True)
        )
      )
      (5): Sequential(
        (0): Bottleneck(
          (conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(2, 2), bias=False)
          (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (relu): ReLU(inplace=True)
          (downsample): Sequential(
            (0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)
            (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (1): Bottleneck(
          (conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (relu): ReLU(inplace=True)
        )
        (2): Bottleneck(
          (conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (relu): ReLU(inplace=True)
        )
        (3): Bottleneck(
          (conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (relu): ReLU(inplace=True)
        )
      )
      (6): Sequential(
        (0): Bottleneck(
          (conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(2, 2), bias=False)
          (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (relu): ReLU(inplace=True)
          (downsample): Sequential(
            (0): Conv2d(512, 1024, kernel_size=(1, 1), stride=(2, 2), bias=False)
            (1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (1): Bottleneck(
          (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (relu): ReLU(inplace=True)
        )
        (2): Bottleneck(
          (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (relu): ReLU(inplace=True)
        )
        (3): Bottleneck(
          (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (relu): ReLU(inplace=True)
        )
        (4): Bottleneck(
          (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (relu): ReLU(inplace=True)
        )
        (5): Bottleneck(
          (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (relu): ReLU(inplace=True)
        )
      )
    )

2.RPN

    import numpy as np


    def generate_anchor_base(base_size=16, ratios=[0.5, 1, 2], anchor_scales=[8, 16, 32]):
        anchor_base = np.zeros((len(ratios) * len(anchor_scales), 4), dtype=np.float32)
        for i in range(len(ratios)):
            for j in range(len(anchor_scales)):
                h = base_size * anchor_scales[j] * np.sqrt(ratios[i])
                w = base_size * anchor_scales[j] * np.sqrt(1. / ratios[i])

                index = i * len(anchor_scales) + j
                anchor_base[index, 0] = - h / 2.
                anchor_base[index, 1] = - w / 2.
                anchor_base[index, 2] = h / 2.
                anchor_base[index, 3] = w / 2.
        return anchor_base


    # Generate the 9 base anchors for every point on the feature map
    def _enumerate_shifted_anchor(anchor_base, feat_stride, height, width):
        # Compute the grid points
        shift_x = np.arange(0, width * feat_stride, feat_stride)
        shift_y = np.arange(0, height * feat_stride, feat_stride)
        shift_x, shift_y = np.meshgrid(shift_x, shift_y)
        shift = np.stack((shift_x.ravel(), shift_y.ravel(), shift_x.ravel(), shift_y.ravel(),), axis=1)

        # The 9 prior boxes at each grid point
        A = anchor_base.shape[0]
        K = shift.shape[0]
        anchor = anchor_base.reshape((1, A, 4)) + \
                 shift.reshape((K, 1, 4))
        # All prior boxes
        anchor = anchor.reshape((K * A, 4)).astype(np.float32)
        return anchor


    import matplotlib.pyplot as plt

    nine_anchors = generate_anchor_base()  # the 9 base anchors for each point on the feature map
    height, width, feat_stride = 38, 38, 16
    # Shape of the feature map
    feature_map_w, feature_map_h, feature_map_c = 38, 38, 16
    # Generate all base anchors for the whole feature map: feature_map_w*feature_map_h*9 in total
    anchors_all = _enumerate_shifted_anchor(nine_anchors, feat_stride, height, width)
    print(np.shape(anchors_all))

    fig = plt.figure()
    ax = fig.add_subplot(111)
    plt.ylim(-300, 900)
    plt.xlim(-300, 900)
    # Simulate drawing the original image before feature extraction
    shift_x = np.arange(0, width * feat_stride, feat_stride)
    shift_y = np.arange(0, height * feat_stride, feat_stride)
    shift_x, shift_y = np.meshgrid(shift_x, shift_y)
    plt.scatter(shift_x, shift_y)

    # Draw all anchors in the original image that correspond to the feature-map pixel (pix_x, pix_y)
    pix_x, pix_y = 12, 0
    index_begin = pix_y * width * 9 + pix_x * 9
    index_end = pix_y * width * 9 + pix_x * 9 + 9
    print(index_begin)
    box_widths = anchors_all[:, 2] - anchors_all[:, 0]
    box_heights = anchors_all[:, 3] - anchors_all[:, 1]
    for i in range(index_begin, index_end):
        rect = plt.Rectangle([anchors_all[i, 0], anchors_all[i, 1]], box_widths[i], box_heights[i], color="r", fill=False)
        ax.add_patch(rect)
    plt.show()

    # Convert the RPN predictions into proposal boxes
    def loc2bbox(src_bbox, loc):
        if src_bbox.size()[0] == 0:
            return torch.zeros((0, 4), dtype=loc.dtype)

        src_width = torch.unsqueeze(src_bbox[:, 2] - src_bbox[:, 0], -1)
        src_height = torch.unsqueeze(src_bbox[:, 3] - src_bbox[:, 1], -1)
        src_ctr_x = torch.unsqueeze(src_bbox[:, 0], -1) + 0.5 * src_width
        src_ctr_y = torch.unsqueeze(src_bbox[:, 1], -1) + 0.5 * src_height

        dx = loc[:, 0::4]
        dy = loc[:, 1::4]
        dw = loc[:, 2::4]
        dh = loc[:, 3::4]

        ctr_x = dx * src_width + src_ctr_x
        ctr_y = dy * src_height + src_ctr_y
        w = torch.exp(dw) * src_width
        h = torch.exp(dh) * src_height

        dst_bbox = torch.zeros_like(loc)
        dst_bbox[:, 0::4] = ctr_x - 0.5 * w
        dst_bbox[:, 1::4] = ctr_y - 0.5 * h
        dst_bbox[:, 2::4] = ctr_x + 0.5 * w
        dst_bbox[:, 3::4] = ctr_y + 0.5 * h
        return dst_bbox

    from torchvision.ops import nms  # provides the nms() call used below


    class ProposalCreator():
        def __init__(self, mode, nms_thresh=0.7,
                     n_train_pre_nms=12000,
                     n_train_post_nms=600,
                     n_test_pre_nms=3000,
                     n_test_post_nms=300,
                     min_size=16):
            self.mode = mode
            self.nms_thresh = nms_thresh
            self.n_train_pre_nms = n_train_pre_nms
            self.n_train_post_nms = n_train_post_nms
            self.n_test_pre_nms = n_test_pre_nms
            self.n_test_post_nms = n_test_post_nms
            self.min_size = min_size

        def __call__(self, loc, score, anchor, img_size, scale=1.):
            if self.mode == "training":
                n_pre_nms = self.n_train_pre_nms
                n_post_nms = self.n_train_post_nms
            else:
                n_pre_nms = self.n_test_pre_nms
                n_post_nms = self.n_test_post_nms

            anchor = torch.from_numpy(anchor)
            if loc.is_cuda:
                anchor = anchor.cuda()
            #-----------------------------------#
            #   Convert the RPN predictions into proposal boxes
            #-----------------------------------#
            roi = loc2bbox(anchor, loc)

            #-----------------------------------#
            #   Keep proposals inside the image borders
            #-----------------------------------#
            roi[:, [0, 2]] = torch.clamp(roi[:, [0, 2]], min=0, max=img_size[1])
            roi[:, [1, 3]] = torch.clamp(roi[:, [1, 3]], min=0, max=img_size[0])

            #-----------------------------------#
            #   Proposal width and height must not be smaller than 16
            #-----------------------------------#
            min_size = self.min_size * scale
            keep = torch.where(((roi[:, 2] - roi[:, 0]) >= min_size) & ((roi[:, 3] - roi[:, 1]) >= min_size))[0]
            roi = roi[keep, :]
            score = score[keep]

            #-----------------------------------#
            #   Sort by score and take the top proposals
            #-----------------------------------#
            order = torch.argsort(score, descending=True)
            if n_pre_nms > 0:
                order = order[:n_pre_nms]
            roi = roi[order, :]
            score = score[order]

            #-----------------------------------#
            #   Apply non-maximum suppression to the proposals
            #-----------------------------------#
            keep = nms(roi, score, self.nms_thresh)
            keep = keep[:n_post_nms]
            roi = roi[keep]
            return roi

3.Combine the backbone and the RPN, denoted FRCNN_RPN

    class FRCNN_RPN(nn.Module):
        def __init__(self, extractor, rpn):
            super(FRCNN_RPN, self).__init__()
            self.extractor = extractor
            self.rpn = rpn

        def forward(self, x, img_size):
            print(img_size)
            feature_map = self.extractor(x)
            rpn_locs, rpn_scores, rois, roi_indices, anchor = self.rpn(feature_map, img_size)
            return rpn_locs, rpn_scores, rois, roi_indices, anchor

    # Load the model parameters
    param = torch.load("./frcnn-restnet50.pth")
    param.keys()

    # RegionProposalNetwork is not defined in this excerpt;
    # it is assumed to be available from the full project code.
    rpn = RegionProposalNetwork(in_channels=1024, mode="predict")
    frcnn_rpn = FRCNN_RPN(extractor, rpn)
    frcnn_rpn_state_dict = frcnn_rpn.state_dict()
    for key in frcnn_rpn_state_dict.keys():
        frcnn_rpn_state_dict[key] = param[key]
    frcnn_rpn.load_state_dict(frcnn_rpn_state_dict)
    frcnn_rpn.eval()  # inference: make BatchNorm use its running statistics

    import copy
    import os

    from PIL import Image


    def get_new_img_size(width, height, img_min_side=600):
        if width <= height:
            f = float(img_min_side) / width
            resized_height = int(f * height)
            resized_width = int(img_min_side)
        else:
            f = float(img_min_side) / height
            resized_width = int(f * width)
            resized_height = int(img_min_side)
        return resized_width, resized_height


    img_path = os.path.join("xx.jpg")
    image = Image.open(img_path)
    image = image.convert("RGB")  # convert to RGB so grayscale images can also be used for prediction

    image_shape = np.array(np.shape(image)[0:2])
    old_width, old_height = image_shape[1], image_shape[0]
    old_image = copy.deepcopy(image)

    # Resize the original image so that its shorter side is 600
    width, height = get_new_img_size(old_width, old_height)
    image = image.resize([width, height], Image.BICUBIC)
    print(image.size)

    # Image preprocessing: scale pixel values to [0, 1] and move channels first
    photo = np.transpose(np.array(image, dtype=np.float32) / 255, (2, 0, 1))

    with torch.no_grad():
        images = torch.from_numpy(np.asarray([photo]))
        rpn_locs, rpn_scores, rois, roi_indices, anchor = frcnn_rpn(images, [height, width])

    fig = plt.figure(dpi=200)
    ax = fig.add_subplot(111)
    ax.imshow(image)
    # Draw the RPN results
    for i in range(rois.shape[0]):
        x1, y1, x2, y2 = rois[i]
        w, h = x2 - x1, y2 - y1
        rect = plt.Rectangle([x1, y1], w, h, color="r", fill=False)
        ax.add_patch(rect)
    plt.xticks([])
    plt.yticks([])
    plt.show()
    print(anchor.shape)
    print(rois.shape)
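
The shape comments in the backbone (600 -> 300 -> 150 -> 75 -> 38) and the anchor count printed in the RPN section can be checked by hand with the standard convolution and pooling output-size formulas. The sketch below is plain Python and does not come from the original post; the helper names `conv_out`, `pool_out_ceil`, `feature_map_size`, and `anchor_count` are hypothetical, and it assumes the layer settings shown above (7x7 stride-2 conv, 3x3 stride-2 max pool with `ceil_mode=True`, and a stride-2 1x1 conv at the start of layer2 and layer3).

```python
import math


def conv_out(size, kernel, stride, padding):
    """Output length of a convolution along one axis (floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out_ceil(size, kernel, stride, padding=0):
    """Output length of max pooling with ceil_mode=True along one axis."""
    out = math.ceil((size + 2 * padding - kernel) / stride) + 1
    # A window that would start beyond the (left-padded) input is dropped.
    if (out - 1) * stride >= size + padding:
        out -= 1
    return out


def feature_map_size(size):
    """Side length of the shared feature map (output of layer3) for one input side."""
    size = conv_out(size, kernel=7, stride=2, padding=3)  # conv1
    size = pool_out_ceil(size, kernel=3, stride=2)        # maxpool
    # layer1 uses stride 1, so the size is unchanged.
    size = conv_out(size, kernel=1, stride=2, padding=0)  # first block of layer2
    size = conv_out(size, kernel=1, stride=2, padding=0)  # first block of layer3
    return size


def anchor_count(img_h, img_w, anchors_per_point=9):
    """Total number of anchors laid over the shared feature map."""
    return feature_map_size(img_h) * feature_map_size(img_w) * anchors_per_point


print(feature_map_size(600))   # side length of the shared feature map
print(anchor_count(600, 600))  # number of rows expected in anchors_all
```

For a 600x600 input this gives a 38x38 feature map, which matches the `height, width = 38, 38` used when enumerating anchors, so `anchors_all` should have 38 * 38 * 9 rows.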

get_new_img_size(old_width, old_height) image = image.resize([width,height], Image.BICUBIC) print(image.size) # 图片预处理,归一化。 photo = np.transpose(np.array(image,dtype = np.float32)/255, (2, 0, 1)) with torch.no_grad(): images = torch.from_numpy(np.asarray([photo])) rpn_locs, rpn_scores, rois, roi_indices, anchor = frcnn_rpn(images,[height,width]) fig = plt.figure(dpi=200) ax = fig.add_subplot(111) ax.imshow(image) # 绘制RPN的结果 for i in range(rois.shape[0]): x1,y1,x2,y2 = rois[i] w,h = x2-x1,y2-y1 rect = plt.Rectangle([x1,y1],w,h,color="r",fill=False) ax.add_patch(rect) plt.xticks([]) plt.yticks([]) plt.show() print(anchor.shape) print(rois.shape) -

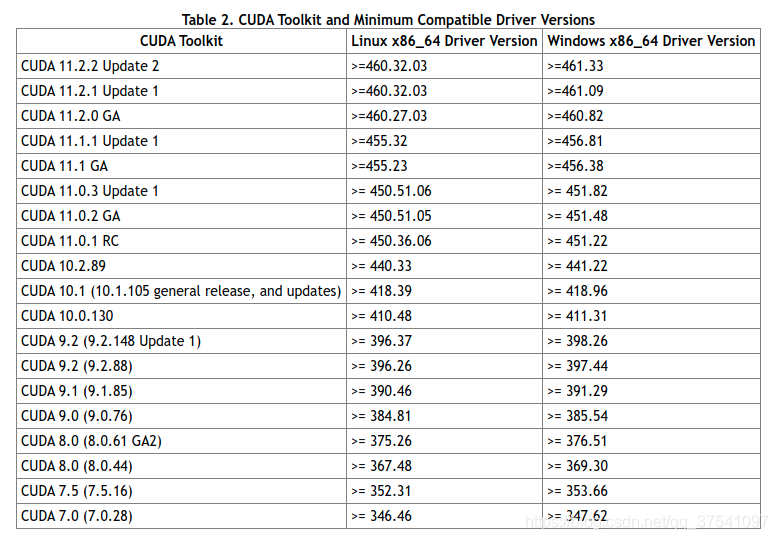

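Installing PyTorch offline

Offline installation is meant for unstable networks where an online installation keeps failing partway through.

1. Before installing

1.1 Install the GPU driver

(omitted)

1.2 Create a virtual environment

```bash
conda create -n torch1.9 python=3.8
conda activate torch1.9
```

2. Offline installation

2.1 Version compatibility

2.2 Download the packages

Download page: download.pytorch.org/whl/torch_stable.html

On that page we can find the two files we need: torch-1.9.0+cu111-cp38-cp38-linux_x86_64.whl and torchvision-0.10.0+cu111-cp38-cp38-linux_x86_64.whl. Note that cu111 stands for CUDA 11.1, cp38 for a build against Python 3.8, and linux_x86_64 for a 64-bit Linux system on the x86 platform. After the download finishes, transfer both files to your offline host (server). Then enter the conda virtual environment created above and install the whl packages one after the other.

2.3 Install offline

```bash
# CPU build
pip install torch-1.9.0+cpu-cp38-cp38-linux_x86_64.whl
pip install torchvision-0.10.0+cpu-cp38-cp38-linux_x86_64.whl

# GPU build
pip install torch-1.9.0+cu111-cp38-cp38-linux_x86_64.whl
pip install torchvision-0.10.0+cu111-cp38-cp38-linux_x86_64.whl
```

References

Pytorch1.9 CPU/GPU(CUDA11.1)安装_torch==1.9.0+cu111_太阳花的小绿豆的博客-CSDN博客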
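Picking the wrong wheel is the most common way an offline install fails, so it can help to decode the file name before copying it to the server. The following is a minimal sketch, assuming the standard wheel naming scheme (name-version-python tag-ABI tag-platform tag) without a build tag; the helper names `parse_wheel_name` and `matches_interpreter` are made up for this illustration and are not part of pip or PyTorch.

```python
import re
import sys

# name-version-pythontag-abitag-platformtag.whl (optional build tag not handled in this sketch)
WHEEL_RE = re.compile(
    r"^(?P<name>[^-]+)-(?P<version>[^-]+)-(?P<python>[^-]+)-(?P<abi>[^-]+)-(?P<platform>[^-]+)\.whl$"
)

def parse_wheel_name(filename):
    """Split a wheel file name into its fields (hypothetical helper)."""
    match = WHEEL_RE.match(filename)
    if match is None:
        raise ValueError(f"not a recognised wheel name: {filename}")
    info = match.groupdict()
    # PyTorch wheels carry the backend as a local version label such as "+cu111" or "+cpu"
    version, _, backend = info["version"].partition("+")
    info["version"] = version
    info["backend"] = backend or None
    return info

def matches_interpreter(info, version_info=sys.version_info):
    """True when the python tag (e.g. cp38) matches the given interpreter version."""
    return info["python"] == f"cp{version_info[0]}{version_info[1]}"

if __name__ == "__main__":
    info = parse_wheel_name("torch-1.9.0+cu111-cp38-cp38-linux_x86_64.whl")
    print(info)
    print("fits this interpreter:", matches_interpreter(info))
```

Running the script inside the target conda environment shows right away whether the cp tag of a downloaded file fits the Python version that environment uses.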

-

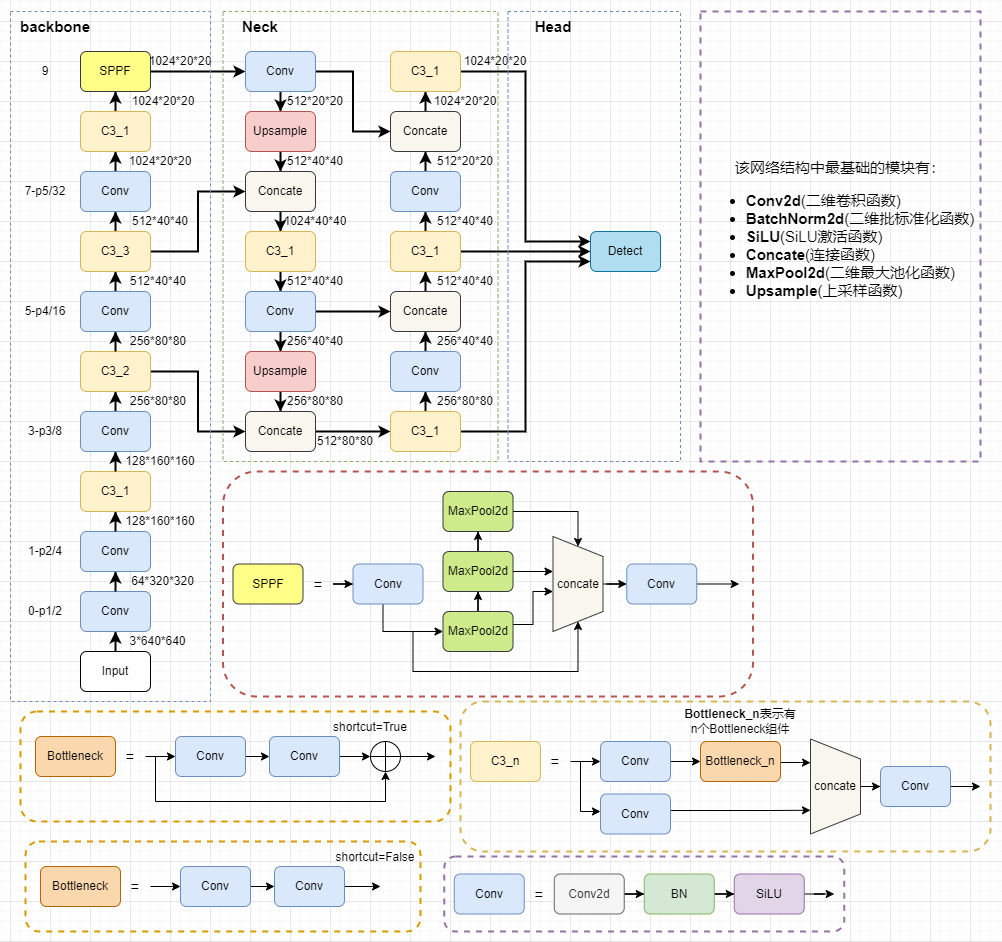

YOLOv5 four-detection-head configuration

1. Network structure

The original YOLOv5 network structure is as follows:

After adding one detection layer, the network structure is as follows (dashed lines mark the removed parts, thin lines mark the added data flow):

2. Network configuration

Step 1: create the file yolov5s-add-one-layer.yaml in the models folder.

Step 2: paste the following content into the new file.

```yaml
# YOLOv5 by Ultralytics, GPL-3.0 license

# Parameters
nc: 2  # number of classes
depth_multiple: 0.33  # model depth multiple
width_multiple: 0.50  # layer channel multiple
anchors:
  - [4,5, 8,10, 22,18]  # P2/4
  - [10,13, 16,30, 33,23]  # P3/8
  - [30,61, 62,45, 59,119]  # P4/16
  - [116,90, 156,198, 373,326]  # P5/32

# YOLOv5 v6.0 backbone
backbone:
  # [from, number, module, args]
  [[-1, 1, Conv, [64, 6, 2, 2]],  # 0-P1/2
   [-1, 1, Conv, [128, 3, 2]],  # 1-P2/4
   [-1, 3, C3, [128]],
   [-1, 1, Conv, [256, 3, 2]],  # 3-P3/8
   [-1, 6, C3, [256]],
   [-1, 1, Conv, [512, 3, 2]],  # 5-P4/16
   [-1, 9, C3, [512]],
   [-1, 1, Conv, [1024, 3, 2]],  # 7-P5/32
   [-1, 3, C3, [1024]],
   [-1, 1, SPPF, [1024, 5]],  # 9
  ]

# YOLOv5 v6.0 head
head:
  [[-1, 1, Conv, [512, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 6], 1, Concat, [1]],  # cat backbone P4
   [-1, 3, C3, [512, False]],  # 13

   [-1, 1, Conv, [256, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 4], 1, Concat, [1]],  # cat backbone P3
   # add feature extraction layer
   [-1, 3, C3, [256, False]],  # 17
   [-1, 1, Conv, [128, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 2], 1, Concat, [1]],  # cat backbone P2
   # add detect layer
   [-1, 3, C3, [128, False]],  # 21 (P2/4-minimum)
   [-1, 1, Conv, [128, 3, 2]],
   [[-1, 18], 1, Concat, [1]],  # cat head P3
   # end
   [-1, 3, C3, [256, False]],  # 24 (P3/8-small)

   [-1, 1, Conv, [256, 3, 2]],
   [[-1, 14], 1, Concat, [1]],  # cat head P4
   [-1, 3, C3, [512, False]],  # 27 (P4/16-medium)

   [-1, 1, Conv, [512, 3, 2]],
   [[-1, 10], 1, Concat, [1]],  # cat head P5
   [-1, 3, C3, [1024, False]],  # 30 (P5/32-large)

   [[21, 24, 27, 30], 1, Detect, [nc, anchors]],  # Detect(P2, P3, P4, P5)
  ]
```

Step 3: run the usual training workflow with the new model; see: 快速使用YOLOv5进行训练VOC格式的数据集 - jupiter's blog (inat.top).

References

【Yolov5】Yolov5添加检测层,四层结构对小目标、密集场景更友好_yolov5增加小目标检测层-CSDN博客
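To get a feel for what the extra P2/4 head costs, the grid size of each detection head and the resulting number of predicted boxes can be worked out from the strides in the config. This is a small sketch, assuming a square 640 x 640 input and three anchors per head as in the `anchors` section of the yaml; `detection_grid` is a name invented for this example and does not exist in the YOLOv5 code base.

```python
def detection_grid(img_size=640, strides=(4, 8, 16, 32), anchors_per_head=3):
    """Return the grid side length per head and the total number of predicted boxes."""
    grids = [img_size // s for s in strides]
    total = sum(anchors_per_head * g * g for g in grids)
    return grids, total

if __name__ == "__main__":
    for name, strides in (("3 heads", (8, 16, 32)), ("4 heads", (4, 8, 16, 32))):
        grids, total = detection_grid(640, strides)
        print(name, grids, total)
```

Under these assumptions the stride-4 head alone contributes a 160 x 160 grid, which is why the four-head variant produces several times more candidate boxes than the standard three-head model.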

-

[Model-assisted annotation for object detection] Run YOLOv5 on images and convert the results to VOC xml

1. Run the YOLOv5 detector on an image folder and save the txt results with confidences

```bash
python detect.py --weights runs\train\exp3\weights\best.pt --source [image folder to detect] --save-txt --save-conf
```

2. Convert the detection results to VOC xml

```python
import os
import copy
from xml.dom import minidom

from tqdm import tqdm
import cv2
import xmltodict

# Folders holding the images, the YOLO txt files and the output xml files
base_dir = "[the image folder detected above]"
img_dir = os.path.join(base_dir, "img")
txt_dir = os.path.join(base_dir, "labels")
xml_dir = os.path.join(base_dir, "xml")
os.makedirs(xml_dir, exist_ok=True)

# Must be a list: the class names from data.yaml of the YOLO project, in index order
class_name_list = []

obj_base = {
    'name': 'name',
    'bndbox': {
        'xmin': 1,
        'ymin': 1,
        'xmax': 1,
        'ymax': 1
    }
}

xml_base = {
    'annotation': {
        'folder': 'img',
        'filename': '',
        'size': {
            'width': 1,
            'height': 1,
            'depth': 3
        },
        'object': []
    }
}

img_name_list = os.listdir(img_dir)
pbar = tqdm(total=len(img_name_list))
pbar.set_description("YOLOTXT2VOC:")
for i in range(len(img_name_list)):
    # Build the paths of the matching files
    img_name = img_name_list[i]
    stem = os.path.splitext(img_name)[0]
    img_path = os.path.join(img_dir, img_name)
    txt_path = os.path.join(txt_dir, stem + ".txt")
    xml_path = os.path.join(xml_dir, stem + ".xml")

    # Initialize the xml object
    xml_tmp = copy.deepcopy(xml_base)
    xml_tmp['annotation']['filename'] = img_name

    # Read the image width and height and store them in the xml
    img = cv2.imread(img_path)
    img_height, img_width = img.shape[:2]
    xml_tmp['annotation']['size']['width'] = img_width
    xml_tmp['annotation']['size']['height'] = img_height

    # Read the txt file; detect.py writes none for images without detections
    content = ""
    if os.path.exists(txt_path):
        with open(txt_path, 'r') as f:
            content = f.read()

    # Parse the txt content line by line
    for line in content.split("\n"):
        if not line: continue
        data_item_list = line.split()
        # Skip detections with a confidence below 0.5
        conf = float(data_item_list[5])
        if conf < 0.5: continue

        # Convert one txt line into the matching xml object
        obj_tmp = copy.deepcopy(obj_base)
        obj_tmp["name"] = class_name_list[int(data_item_list[0])]
        x_center = int(float(data_item_list[1]) * img_width)
        y_center = int(float(data_item_list[2]) * img_height)
        width = int(float(data_item_list[3]) * img_width)
        height = int(float(data_item_list[4]) * img_height)
        obj_tmp["bndbox"]["xmin"] = int(x_center - width / 2.0)
        obj_tmp["bndbox"]["ymin"] = int(y_center - height / 2.0)
        obj_tmp["bndbox"]["xmax"] = width + obj_tmp["bndbox"]["xmin"]
        obj_tmp["bndbox"]["ymax"] = height + obj_tmp["bndbox"]["ymin"]
        xml_tmp['annotation']['object'].append(obj_tmp)

    # Convert to xml and write the file
    xmlstr = xmltodict.unparse(xml_tmp)
    xml = minidom.parseString(xmlstr)
    xml_pretty_str = xml.toprettyxml()
    with open(xml_path, "w") as f:
        f.write(xml_pretty_str)
    pbar.update(1)
pbar.close()
```
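The coordinate conversion at the core of the script can be checked in isolation. The sketch below is an assumption-labeled variant, not the code from the post: it rounds instead of truncating and clamps the corners to the image, and the function names `yolo_to_voc_box` and `parse_label_line` are made up for this illustration. It assumes the label layout written with `--save-txt --save-conf`, namely class, x_center, y_center, width, height, confidence, all box values normalized to [0, 1].

```python
def yolo_to_voc_box(x_center, y_center, width, height, img_width, img_height):
    """Convert one normalized YOLO box to pixel (xmin, ymin, xmax, ymax), clamped to the image."""
    def clamp(value, upper):
        return max(0, min(int(round(value)), upper))

    xmin = (x_center - width / 2.0) * img_width
    ymin = (y_center - height / 2.0) * img_height
    xmax = (x_center + width / 2.0) * img_width
    ymax = (y_center + height / 2.0) * img_height
    return (clamp(xmin, img_width), clamp(ymin, img_height),
            clamp(xmax, img_width), clamp(ymax, img_height))

def parse_label_line(line):
    """Split 'cls xc yc w h conf' into (class index, box tuple, confidence)."""
    fields = line.split()
    cls = int(fields[0])
    box = tuple(float(v) for v in fields[1:5])
    conf = float(fields[5])
    return cls, box, conf

if __name__ == "__main__":
    cls, box, conf = parse_label_line("0 0.5 0.5 0.5 0.5 0.9")
    print(cls, conf, yolo_to_voc_box(*box, img_width=640, img_height=480))
```

Clamping matters for boxes that touch the image border, where the center-minus-half-size arithmetic can produce slightly negative corner values that some VOC readers reject.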

-

[Video object detection]: Training MEGA | DFF | FGFA on your own dataset

1. Create the environment

Create a virtual environment

```bash
conda create --name MEGA -y python=3.7
source activate MEGA
```

Install the base packages

```bash
conda install ipython pip
pip install ninja yacs cython matplotlib tqdm opencv-python scipy
export INSTALL_DIR=$PWD
```

Install PyTorch

The original author installs PyTorch like this:

```bash
conda install pytorch=1.3.0 torchvision cudatoolkit=10.0 -c pytorch
```

In practice CUDA 11.0 with PyTorch 1.7 also compiles and runs, so in this step we replace the command with:

```bash
conda install pytorch==1.7.0 torchvision==0.8.0 torchaudio==0.7.0 cudatoolkit=11.0 -c pytorch
```

Next, install the COCO API and the Cityscapes scripts that the author uses:

```bash
cd $INSTALL_DIR
git clone https://github.com/cocodataset/cocoapi.git
cd cocoapi/PythonAPI
python setup.py build_ext install

cd $INSTALL_DIR
git clone https://github.com/mcordts/cityscapesScripts.git
cd cityscapesScripts/
python setup.py build_ext install
```

Install apex (optional; skipping it is recommended, because with apex installed the run failed with an error):

```bash
git clone https://github.com/NVIDIA/apex.git
cd apex
python setup.py install --cuda_ext --cpp_ext
```

With CUDA 11.0 and PyTorch 1.7 the build fails at this point with

```
deform_conv_cuda.cu(155): error: identifier "AT_CHECK " is undefined
```

Fix: add the following code at the top of mega_core/csrc/cuda/deform_conv_cuda.cu and mega_core/csrc/cuda/deform_pool_cuda.cu:

```cpp
#ifndef AT_CHECK
#define AT_CHECK TORCH_CHECK
#endif
```

The original author does not actually use apex for mixed-precision training, so the apex step can be skipped. If you skip it, a few places in the code need to change.

First, in mega_core/engine/trainer.py comment out the apex import at the top and change lines 108-109 to:

```python
losses.backward()
```

In tools/train_net.py comment out lines 33-36:

```python
# try:
#     from apex import amp
# except ImportError:
#     raise ImportError('Use APEX for multi-precision via apex.amp')
```

and comment out line 50 as well:

```python
#model, optimizer = amp.initialize(model, optimizer, opt_level=amp_opt_level)
```

In mega_core/layers/nms.py comment out line 5 and change line 8 to:

```python
nms = _C.nms
```

In mega_core/layers/roi_align.py comment out lines 10 and 57.

In mega_core/layers/roi_pool.py comment out lines 10 and 56.

That should be enough.

2. Download and initialize mega.pytorch

```bash
# install PyTorch Detection
cd $INSTALL_DIR
git clone https://github.com/Scalsol/mega.pytorch.git
cd mega.pytorch

# the following will install the lib with
# symbolic links, so that you can modify
# the files if you want and won't need to
# re-build it
python setup.py build develop

pip install 'pillow<7.0.0'
```

3. Build your own dataset

See the customize.md file provided by the author.

3.1 Dataset format

Reference: https://github.com/Scalsol/mega.pytorch/blob/master/CUSTOMIZE.md

Notes

1. Image names are 6-digit numbers starting from 0 (required unless you want to implement your own data loader).
2. The xml files inside annotation correspond one-to-one to the files in train and val.

```
datasets
├── vid_custom
|   |── train
|   |   |── video_snippet_1
|   |   |   |── 000000.JPEG
|   |   |   |── 000001.JPEG
|   |   |   |── 000002.JPEG
|   |   |   ...
|   |   |── video_snippet_2
|   |   |   |── 000000.JPEG
|   |   |   |── 000001.JPEG
|   |   |   |── 000002.JPEG
|   |   |   ...
|   |   ...
|   |── val
|   |   |── video_snippet_1
|   |   |   |── 000000.JPEG
|   |   |   |── 000001.JPEG
|   |   |   |── 000002.JPEG
|   |   |   ...
|   |   |── video_snippet_2
|   |   |   |── 000000.JPEG
|   |   |   |── 000001.JPEG
|   |   |   |── 000002.JPEG
|   |   |   ...
|   |   ...
|   |── annotation
|   |   |── train
|   |   |   |── video_snippet_1
|   |   |   |   |── 000000.xml
|   |   |   |   |── 000001.xml
|   |   |   |   |── 000002.xml
|   |   |   |   ...
|   |   |   |── video_snippet_2
|   |   |   |   |── 000000.xml
|   |   |   |   |── 000001.xml
|   |   |   |   |── 000002.xml
|   |   |   |   ...
|   |   |   ...
|   |   |── val
|   |   |   |── video_snippet_1
|   |   |   |   |── 000000.xml
|   |   |   |   |── 000001.xml
|   |   |   |   |── 000002.xml
|   |   |   |   ...
|   |   |   |── video_snippet_2
|   |   |   |   |── 000000.xml
|   |   |   |   |── 000001.xml
|   |   |   |   |── 000002.xml
|   |   |   |   ...
|   |   |   ...
```

3.2 Prepare your own txt files

For details, see the files provided under datasets\ILSVRC2015\ImageSets in the original MEGA code.

Format: each line has 4 columns, which stand for: video folder, no meaning (just ignore it), frame number, video length.

The training index VID_train.txt corresponds to the vid_custom/train folder:

```
train/ILSVRC2015_VID_train_0000/ILSVRC2015_train_00000000 1 10 300
train/ILSVRC2015_VID_train_0000/ILSVRC2015_train_00000000 1 30 300
train/ILSVRC2015_VID_train_0000/ILSVRC2015_train_00000000 1 50 300
train/ILSVRC2015_VID_train_0000/ILSVRC2015_train_00000000 1 70 300
train/ILSVRC2015_VID_train_0000/ILSVRC2015_train_00000000 1 90 300
train/ILSVRC2015_VID_train_0000/ILSVRC2015_train_00000000 1 110 300
train/ILSVRC2015_VID_train_0000/ILSVRC2015_train_00000000 1 130 300
train/ILSVRC2015_VID_train_0000/ILSVRC2015_train_00000000 1 150 300
train/ILSVRC2015_VID_train_0000/ILSVRC2015_train_00000000 1 170 300
train/ILSVRC2015_VID_train_0000/ILSVRC2015_train_00000000 1 190 300
train/ILSVRC2015_VID_train_0000/ILSVRC2015_train_00000000 1 210 300
train/ILSVRC2015_VID_train_0000/ILSVRC2015_train_00000000 1 230 300
train/ILSVRC2015_VID_train_0000/ILSVRC2015_train_00000000 1 250 300
train/ILSVRC2015_VID_train_0000/ILSVRC2015_train_00000000 1 270 300
train/ILSVRC2015_VID_train_0000/ILSVRC2015_train_00000000 1 290 300
train/ILSVRC2015_VID_train_0000/ILSVRC2015_train_00001000 1 1 48
train/ILSVRC2015_VID_train_0000/ILSVRC2015_train_00001000 1 4 48
train/ILSVRC2015_VID_train_0000/ILSVRC2015_train_00001000 1 8 48
···
```

The validation index VID_val.txt corresponds to the vid_custom/val folder:

```
val/ILSVRC2015_val_00000000 1 0 464
val/ILSVRC2015_val_00000000 2 1 464
val/ILSVRC2015_val_00000000 3 2 464
val/ILSVRC2015_val_00000000 4 3 464
val/ILSVRC2015_val_00000000 5 4 464
val/ILSVRC2015_val_00000000 6 5 464
val/ILSVRC2015_val_00000000 7 6 464
val/ILSVRC2015_val_00000000 8 7 464
val/ILSVRC2015_val_00000000 9 8 464
val/ILSVRC2015_val_00000000 10 9 464
val/ILSVRC2015_val_00000000 11 10 464
val/ILSVRC2015_val_00000000 12 11 464
val/ILSVRC2015_val_00000000 13 12 464
val/ILSVRC2015_val_00000000 14 13 464
val/ILSVRC2015_val_00000000 15 14 464
val/ILSVRC2015_val_00000000 16 15 464
···
```

4. Parameter changes

In mega_core/data/datasets/vid.py, modify classes and classes_map inside VIDDataset:

```python
# classes=['__background__',#always index0
#          'car']
# classes_map=['__background__',# always index0
#              'n02958343']

# For a self-labeled dataset, simply fill both lists with the same names
classes = ['__background__',  # always index 0
           'BridgeVehicle', 'Person', 'FollowMe', 'Plane', 'LuggageTruck', 'RefuelingTruck', 'FoodTruck', 'Tractor']
classes_map = ['__background__',  # always index 0
               'BridgeVehicle', 'Person', 'FollowMe', 'Plane', 'LuggageTruck', 'RefuelingTruck', 'FoodTruck', 'Tractor']
```

In mega_core/config/paths_catalog.py, modify DatasetCatalog.DATASETS by appending the following entries at the end of the variable:

```python
"vid_custom_train":{
    "img_dir":"vid_custom/train",
    "anno_path":"vid_custom/annotation",
    "img_index":"vid_custom/VID_train.txt"
},
"vid_custom_val":{
    "img_dir":"vid_custom/val",
    "anno_path":"vid_custom/annotation",
    "img_index":"vid_custom/VID_val.txt"
}
```

In the function that follows, extend the if statement with the vid condition:

```python
if ("DET" in name) or ("VID" in name) or ("vid" in name):
```

Modify configs/BASE_RCNN_1gpu.yaml (pick the file that matches the number of GPUs you train with):

```yaml
NUM_CLASSES: 9  # (number of object classes + background)
TRAIN: ("vid_custom_train",)  # remember the trailing ","
TEST: ("vid_custom_val",)  # remember the trailing ","
```

Modify configs/MEGA/vid_R_101_C4_MEGA_1x.yaml:

```yaml
DATASETS:
  TRAIN: ("vid_custom_train",)  # remember the trailing ","
  TEST: ("vid_custom_val",)  # remember the trailing ","
```

5. Training and testing

5.1 Start training

```bash
python -m torch.distributed.launch \
    --nproc_per_node=1 \
    tools/train_net.py \
    --master_port=$((RANDOM + 10000)) \
    --config-file configs/BASE_RCNN_1gpu.yaml \
    OUTPUT_DIR training_dir/BASE_RCNN
```

```bash
python -m torch.distributed.launch \
    --nproc_per_node=1 \
    tools/train_net.py \
    --master_port=$((RANDOM + 10000)) \
    --config-file configs/DFF/vid_R_50_C4_DFF_1x.yaml \
    OUTPUT_DIR training_dir/vid_R_50_C4_DFF_1x
```

5.2 Start testing

```bash
python -m torch.distributed.launch \
    --nproc_per_node 1 \
    tools/test_net.py \
    --config-file configs/BASE_RCNN_1gpu.yaml \
    MODEL.WEIGHT training_dir/BASE_RCNN/model_0020000.pth

python tools/test_prediction.py \
    --config-file configs/BASE_RCNN_1gpu.yaml \
    --prediction ./
```

References

MEGA训练自己的数据集-docker
https://github.com/Scalsol/mega.pytorch/issues/63
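Writing the index files from section 3.2 by hand is tedious, so here is a minimal sketch of how they could be generated from the folder layout in section 3.1. It is an illustration under assumptions, not a script from the MEGA repository: `build_index_lines` is a made-up name, frames are assumed to be numbered consecutively from 000000 with a .JPEG extension, the ignored second column is always written as 1, and `step` is a hypothetical knob for sampling every n-th frame in the training index.

```python
import os

def build_index_lines(split_dir, split_name, step=1):
    """Return 'folder dummy frame length' lines for every snippet folder under split_dir."""
    lines = []
    for snippet in sorted(os.listdir(split_dir)):
        snippet_dir = os.path.join(split_dir, snippet)
        if not os.path.isdir(snippet_dir):
            continue
        # Count the frames of this snippet; names are assumed to be 000000.JPEG, 000001.JPEG, ...
        frames = [f for f in os.listdir(snippet_dir) if f.lower().endswith(".jpeg")]
        length = len(frames)
        for frame_id in range(0, length, step):
            # Column 2 carries no meaning, so a constant 1 is written
            lines.append(f"{split_name}/{snippet} 1 {frame_id} {length}")
    return lines

if __name__ == "__main__":
    root = "datasets/vid_custom"
    for split, out_name, step in (("train", "VID_train.txt", 10), ("val", "VID_val.txt", 1)):
        split_dir = os.path.join(root, split)
        if os.path.isdir(split_dir):
            with open(os.path.join(root, out_name), "w") as f:
                f.write("\n".join(build_index_lines(split_dir, split, step)) + "\n")
```

The validation index lists every frame, matching the VID_val.txt sample, while the training index in the sample only lists a subset of frames per video, which is what the `step` parameter imitates.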

-

Quick start with mobiledets-yolov4

1. Download the project

```bash
git clone https://github.com/inacmor/mobiledets-yolov4-pytorch.git
```

2. Prepare the data

2.1 Prepare a VOC-format dataset

Put your training images and labels (xml) in data/Imgs and data/Annotations.

Here we simply symlink an existing VOC-format dataset into place:

```bash
ln -s /data_jupiter/dataset/209_VOC_new/Annotations ./
ln -s /data_jupiter/dataset/209_VOC_new/JPEGImages ./Imgs
```

2.2 Edit classes.txt

```
LuggageVehicle
BridgeVehicle
Plane
RefuelVehicle
Person
FoodVehicle
LuggageVehicleHead
FollowMe
TractorVehicle
```

3. Start training

3.0 Set up the environment

Install PyTorch (omitted)

Install apex

```bash
git clone https://github.com/NVIDIA/apex
cd apex
python setup.py install
```

3.1 Preparation before training

```bash
python ready.py
python kmeans.py
```

3.2 Run the training

If you have no pretrained model, comment out the weight loading in train.py:

```python
#model.load_state_dict(torch.load(pretrained_weights), strict=False)
```

```bash
# run the training
python train.py
```
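Before running ready.py it can save time to confirm that every label in the xml annotations also appears in classes.txt, since a misspelled class name usually only surfaces later during training. The following is a small sketch under assumptions: the helper names `load_classes` and `unknown_labels` are invented here, the annotations are assumed to be standard VOC xml with `object/name` elements, and the paths `data/Annotations` and `data/classes.txt` are guesses based on the layout described above.

```python
import os
import xml.etree.ElementTree as ET

def load_classes(classes_path):
    """Read one class name per line, ignoring blank lines."""
    with open(classes_path, encoding="utf-8") as f:
        return [line.strip() for line in f if line.strip()]

def unknown_labels(annotation_dir, classes):
    """Return {label: count} for object names in the xml files that are not in classes."""
    known = set(classes)
    unknown = {}
    for name in sorted(os.listdir(annotation_dir)):
        if not name.endswith(".xml"):
            continue
        root = ET.parse(os.path.join(annotation_dir, name)).getroot()
        for obj in root.iter("object"):
            label = (obj.findtext("name") or "").strip()
            if label not in known:
                unknown[label] = unknown.get(label, 0) + 1
    return unknown

if __name__ == "__main__":
    # Assumed locations; adjust to the actual project layout
    classes = load_classes("data/classes.txt")
    print(unknown_labels("data/Annotations", classes))
```

An empty dictionary means that all annotated labels are covered by classes.txt.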

-

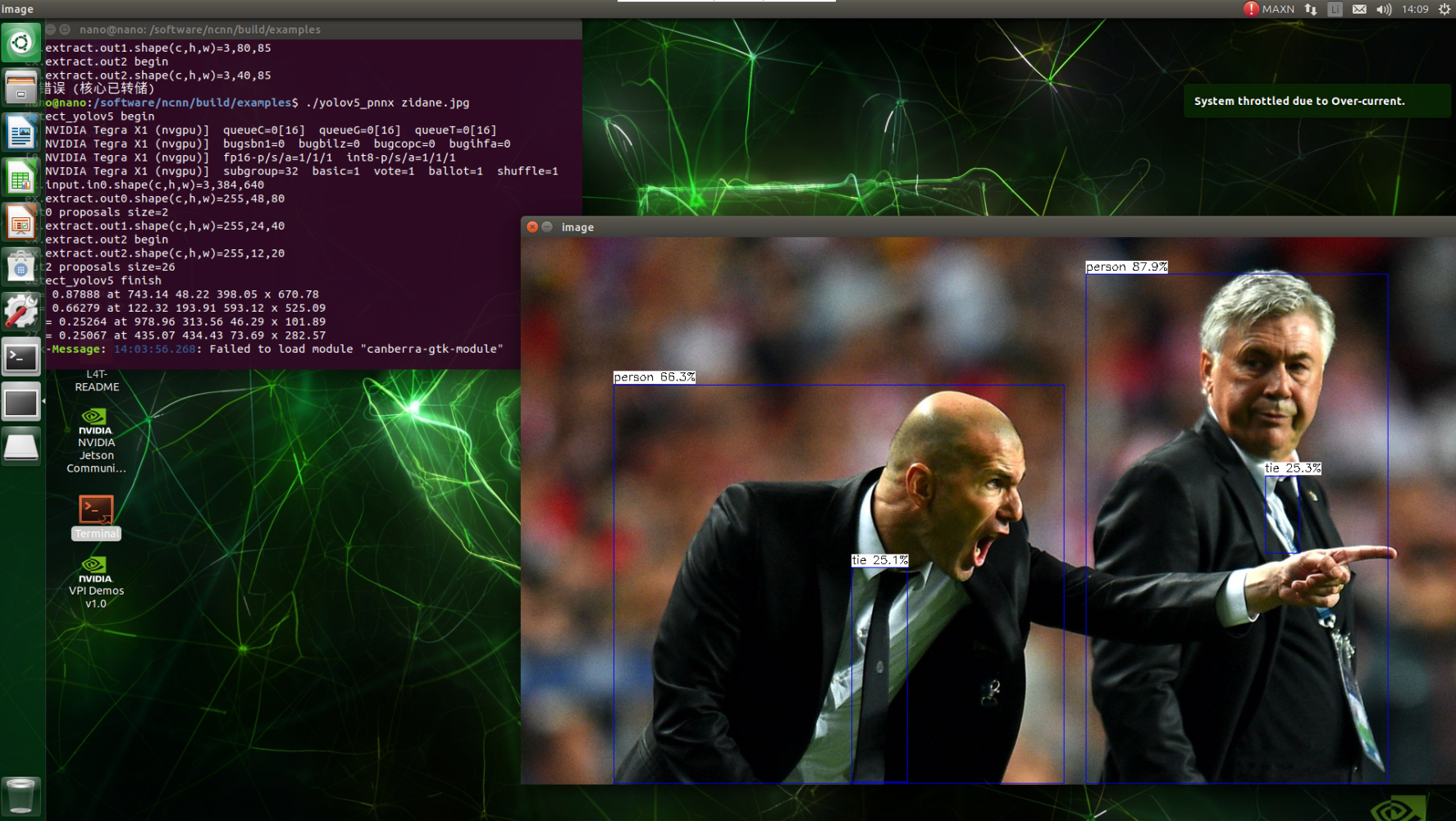

Deploying yolov5s with NCNN

1. Build and install NCNN

See: Linux下如何安装ncnn

2. Model conversion (pt->onnx->ncnn)

$\color{red}{\text{This route does not work: the Reshape parameters in the generated param file are wrong}}$

2.1 Convert the pt model to onnx

```bash
# pt-->onnx
python export.py --weights yolov5s.pt --img 640 --batch 1
```

```bash
# install onnx-simplifier
pip install onnx-simplifier

# simplify the model with onnxsim
python -m onnxsim yolov5s.onnx yolov5s-sim.onnx
```

```
Simplifying...
Finish! Here is the difference:
┏━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━┓
┃            ┃ Original Model ┃ Simplified Model ┃
┡━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━┩
│ Add        │ 10             │ 10               │
│ Concat     │ 17             │ 17               │
│ Constant   │ 20             │ 0                │
│ Conv       │ 60             │ 60               │
│ MaxPool    │ 3              │ 3                │
│ Mul        │ 69             │ 69               │
│ Pow        │ 3              │ 3                │
│ Reshape    │ 6              │ 6                │
│ Resize     │ 2              │ 2                │
│ Sigmoid    │ 60             │ 60               │
│ Split      │ 3              │ 3                │
│ Transpose  │ 3              │ 3                │
│ Model Size │ 28.0MiB        │ 28.0MiB          │
└────────────┴────────────────┴──────────────────┘
```

2.2 Convert the model with onnx2ncnn.exe

Add your ncnn/build/tools/onnx directory to the PATH environment variable, then run:

```bash
onnx2ncnn yolov5s-sim.onnx yolov5s_6.0.param yolov5s_6.0.bin
```

2.3 Test the converted model

Copy yolov5s_6.0.param and yolov5s_6.0.bin to ncnn/build/examples/ and run the command below:

```bash
./yolov5 image-path
```

This fails with a `Segmentation fault (core dumped)` error.

3. Model conversion (pt->torchscript->ncnn)

3.1 Convert the pt model to torchscript

```bash
# pt-->torchscript
python export.py --weights yolov5s.pt --include torchscript --train
```

3.2 Download the prebuilt pnnx tool and run the conversion

pnnx download: https://github.com/pnnx/pnnx

Run the conversion to obtain the model files yolov5s.ncnn.param and yolov5s.ncnn.bin. Specifying inputshape together with an additional inputshape2 produces a model that supports dynamic input shapes.

```bash
./pnnx yolov5s.torchscript inputshape=[1,3,640,640] inputshape2=[1,3,320,320]
```

3.3 Test the converted model

Files for a direct test: yolov5_pnnx.zip

Copy yolov5s.ncnn.param and yolov5s.ncnn.bin to ncnn/build/examples/ and run the command below:

```bash
./yolov5_pnnx image-path
```

References

yolov5 模型部署NCNN(详细过程)
Linux&Jetson Nano下编译安装ncnn
YOLOv5转NCNN过程
Jetson Nano 移植ncnn详细记录
u版YOLOv5目标检测ncnn实现(第二版)
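Since the onnx route in section 2 breaks on the Reshape parameters, it is useful to list those layers in a generated .param file before trying to run it. Below is a rough sketch, assuming the plain-text ncnn param layout (a magic-number line, a line with the layer and blob counts, then one line per layer with type, name, input count, output count, blob names and key=value parameters). The function name `list_layers` and the sample text are made up for this illustration; they are not output from a real conversion.

```python
def list_layers(param_text, layer_type="Reshape"):
    """Return (layer name, {key: value}) for each layer of the given type in ncnn .param text."""
    lines = [ln.split() for ln in param_text.splitlines() if ln.strip()]
    found = []
    for fields in lines[2:]:  # skip the magic number and the layer/blob count line
        if len(fields) < 4 or fields[0] != layer_type:
            continue
        n_in, n_out = int(fields[2]), int(fields[3])
        # Everything after the input and output blob names is a key=value parameter
        raw_params = fields[4 + n_in + n_out:]
        params = dict(p.split("=", 1) for p in raw_params if "=" in p)
        found.append((fields[1], params))
    return found

# Hypothetical sample, written by hand for this sketch
SAMPLE = """7767517
3 3
Input images 0 1 images
Convolution conv0 1 1 images out0 0=255 1=1
Reshape reshape0 1 1 out0 out1 0=6400 1=85 2=3
"""

if __name__ == "__main__":
    for name, params in list_layers(SAMPLE):
        print(name, params)
```

To inspect a real file, read it with `open("yolov5s_6.0.param").read()` and pass the text to `list_layers`; the meaning of each numeric key is defined per operator in the ncnn documentation.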


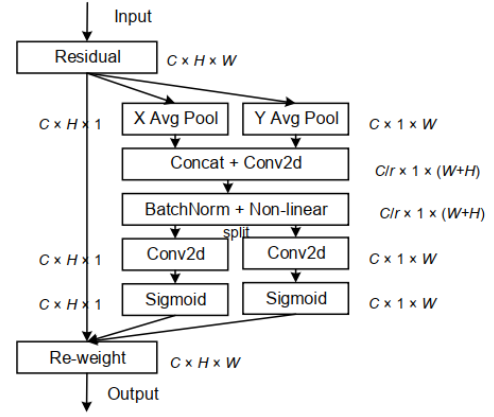

Adding an attention mechanism to YOLOV5 (using CA attention as the example)

0. CA attention network structure diagram

1. First add the attention module you want to common.py

    ### Implementations of common attention modules
    # common.py already imports torch and torch.nn; repeated so the snippet stands alone
    import torch
    import torch.nn as nn


    class h_sigmoid(nn.Module):
        def __init__(self, inplace=True):
            super(h_sigmoid, self).__init__()
            self.relu = nn.ReLU6(inplace=inplace)

        def forward(self, x):
            return self.relu(x + 3) / 6


    class h_swish(nn.Module):
        def __init__(self, inplace=True):
            super(h_swish, self).__init__()
            self.sigmoid = h_sigmoid(inplace=inplace)

        def forward(self, x):
            return x * self.sigmoid(x)


    class CoordAtt(nn.Module):
        def __init__(self, inp, oup, reduction=32):
            super(CoordAtt, self).__init__()
            self.pool_h = nn.AdaptiveAvgPool2d((None, 1))  # pool along width  -> (n, c, h, 1)
            self.pool_w = nn.AdaptiveAvgPool2d((1, None))  # pool along height -> (n, c, 1, w)
            mip = max(8, inp // reduction)  # hidden channels, at least 8
            self.conv1 = nn.Conv2d(inp, mip, kernel_size=1, stride=1, padding=0)
            self.bn1 = nn.BatchNorm2d(mip)
            self.act = h_swish()
            self.conv_h = nn.Conv2d(mip, oup, kernel_size=1, stride=1, padding=0)
            self.conv_w = nn.Conv2d(mip, oup, kernel_size=1, stride=1, padding=0)

        def forward(self, x):
            identity = x
            n, c, h, w = x.size()
            x_h = self.pool_h(x)
            x_w = self.pool_w(x).permute(0, 1, 3, 2)
            # Concatenate both directions along the spatial axis and encode them jointly
            y = torch.cat([x_h, x_w], dim=2)
            y = self.conv1(y)
            y = self.bn1(y)
            y = self.act(y)
            # Split back into the height and width branches
            x_h, x_w = torch.split(y, [h, w], dim=2)
            x_w = x_w.permute(0, 1, 3, 2)
            a_h = self.conv_h(x_h).sigmoid()
            a_w = self.conv_w(x_w).sigmoid()
            # The product with identity requires oup == inp
            out = identity * a_w * a_h
            return out


    class SELayer(nn.Module):
        def __init__(self, c1, r=16):
            super(SELayer, self).__init__()
            self.avgpool = nn.AdaptiveAvgPool2d(1)
            self.l1 = nn.Linear(c1, c1 // r, bias=False)
            self.relu = nn.ReLU(inplace=True)
            self.l2 = nn.Linear(c1 // r, c1, bias=False)
            self.sig = nn.Sigmoid()

        def forward(self, x):
            b, c, _, _ = x.size()
            y = self.avgpool(x).view(b, c)
            y = self.l1(y)
            y = self.relu(y)
            y = self.l2(y)
            y = self.sig(y)
            y = y.view(b, c, 1, 1)
            return x * y.expand_as(x)


    class eca_layer(nn.Module):
        """Constructs an ECA module.

        Args:
            channel: Number of channels of the input feature map
            k_size: Kernel size of the 1D convolution
        """

        def __init__(self, channel, k_size=3):
            super(eca_layer, self).__init__()
            self.avg_pool = nn.AdaptiveAvgPool2d(1)
            self.conv = nn.Conv1d(1, 1, kernel_size=k_size, padding=(k_size - 1) // 2, bias=False)
            self.sigmoid = nn.Sigmoid()

        def forward(self, x):
            # Feature descriptor on the global spatial information
            y = self.avg_pool(x)
            # 1D convolution across the channel dimension
            y = self.conv(y.squeeze(-1).transpose(-1, -2)).transpose(-1, -2).unsqueeze(-1)
            # Channel weights in (0, 1)
            y = self.sigmoid(y)
            # Apply the weights once (the original multiplied by y twice)
            return x * y.expand_as(x)


    class ChannelAttention(nn.Module):
        def __init__(self, in_planes, ratio=16):
            super(ChannelAttention, self).__init__()
            self.avg_pool = nn.AdaptiveAvgPool2d(1)
            self.max_pool = nn.AdaptiveMaxPool2d(1)
            self.f1 = nn.Conv2d(in_planes, in_planes // ratio, 1, bias=False)
            self.relu = nn.ReLU()
            self.f2 = nn.Conv2d(in_planes // ratio, in_planes, 1, bias=False)
            # Alternative: the same shared MLP written as a sequential container
            # self.sharedMLP = nn.Sequential(
            #     nn.Conv2d(in_planes, in_planes // ratio, 1, bias=False), nn.ReLU(),
            #     nn.Conv2d(in_planes // ratio, in_planes, 1, bias=False))
            self.sigmoid = nn.Sigmoid()

        def forward(self, x):
            avg_out = self.f2(self.relu(self.f1(self.avg_pool(x))))
            max_out = self.f2(self.relu(self.f1(self.max_pool(x))))
            out = self.sigmoid(avg_out + max_out)
            return out


    class SpatialAttention(nn.Module):
        def __init__(self, kernel_size=7):
            super(SpatialAttention, self).__init__()
            assert kernel_size in (3, 7), 'kernel size must be 3 or 7'
            padding = 3 if kernel_size == 7 else 1
            self.conv = nn.Conv2d(2, 1, kernel_size, padding=padding, bias=False)
            self.sigmoid = nn.Sigmoid()

        def forward(self, x):
            avg_out = torch.mean(x, dim=1, keepdim=True)
            max_out, _ = torch.max(x, dim=1, keepdim=True)
            x = torch.cat([avg_out, max_out], dim=1)
            x = self.conv(x)
            return self.sigmoid(x)


    class CBAMC3(nn.Module):
        # CSP Bottleneck with 3 convolutions
        def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):  # ch_in, ch_out, number, shortcut, groups, expansion
            super(CBAMC3, self).__init__()
            c_ = int(c2 * e)  # hidden channels
            self.cv1 = Conv(c1, c_, 1, 1)
            self.cv2 = Conv(c1, c_, 1, 1)
            self.cv3 = Conv(2 * c_, c2, 1)
            self.m = nn.Sequential(*[Bottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)])
            self.channel_attention = ChannelAttention(c2, 16)
            self.spatial_attention = SpatialAttention(7)
            # self.m = nn.Sequential(*[CrossConv(c_, c_, 3, 1, g, 1.0, shortcut) for _ in range(n)])

        def forward(self, x):
            # Note: as written, forward does not use cv1/cv2/cv3/m and applies the
            # attention directly to x, so it only works when c1 == c2.
            # (The leftover debug print of out.shape has been removed.)
            out = self.channel_attention(x) * x
            out = self.spatial_attention(out) * out
            return out

2. Modify yolo.py

In the body of def parse_model(d, ch):, add the name of the attention module you want to use.

Before:

    if m in [Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv,
             BottleneckCSP, C3, C3TR, C3SPP, C3Ghost]:
        c1, c2 = ch[f], args[0]
        if c2 != no:  # if not output
            c2 = make_divisible(c2 * gw, 8)

After:

    if m in [Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv,
             BottleneckCSP, C3, C3TR, C3SPP, C3Ghost, CoordAtt]:
        c1, c2 = ch[f], args[0]
        if c2 != no:  # if not output
            c2 = make_divisible(c2 * gw, 8)

3. Modify the yaml file / create a custom yaml file

Example: yolov5s-CA.yaml
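When choosing the channel argument for a CoordAtt entry in the yaml, it helps to know what the `make_divisible(c2 * gw, 8)` line in parse_model will turn it into. The sketch below re-implements that arithmetic in plain Python. `make_divisible` is written here as I understand the YOLOv5 helper (round up to a multiple of the divisor). The names `scaled_channels` and `coordatt_hidden`, the width multiple 0.5 (yolov5s) and the output size 255 (3 anchors × (80 classes + 5)) are assumptions for illustration.

```python
import math


def make_divisible(x, divisor):
    # Round x up to the nearest multiple of divisor.
    return math.ceil(x / divisor) * divisor


def scaled_channels(c2, gw, no):
    # Mirrors the parse_model branch: the detection output width `no`
    # is left untouched, every other width is scaled by gw and rounded.
    return c2 if c2 == no else make_divisible(c2 * gw, 8)


def coordatt_hidden(inp, reduction=32):
    # Hidden width used inside CoordAtt: inp // reduction, but never below 8.
    return max(8, inp // reduction)


gw, no = 0.5, 255  # assumed: yolov5s width multiple, 3 * (80 + 5) outputs
for c2 in (128, 1024, 100, 255):
    c = scaled_channels(c2, gw, no)
    print(c2, "->", c, "| CoordAtt hidden channels:", coordatt_hidden(c))
```

Because `CoordAtt.forward` multiplies the attention maps with the unmodified input, the scaled `c2` has to equal the channel count of the layer feeding it; running the numbers like this before editing the yaml makes a mismatch easy to spot.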
