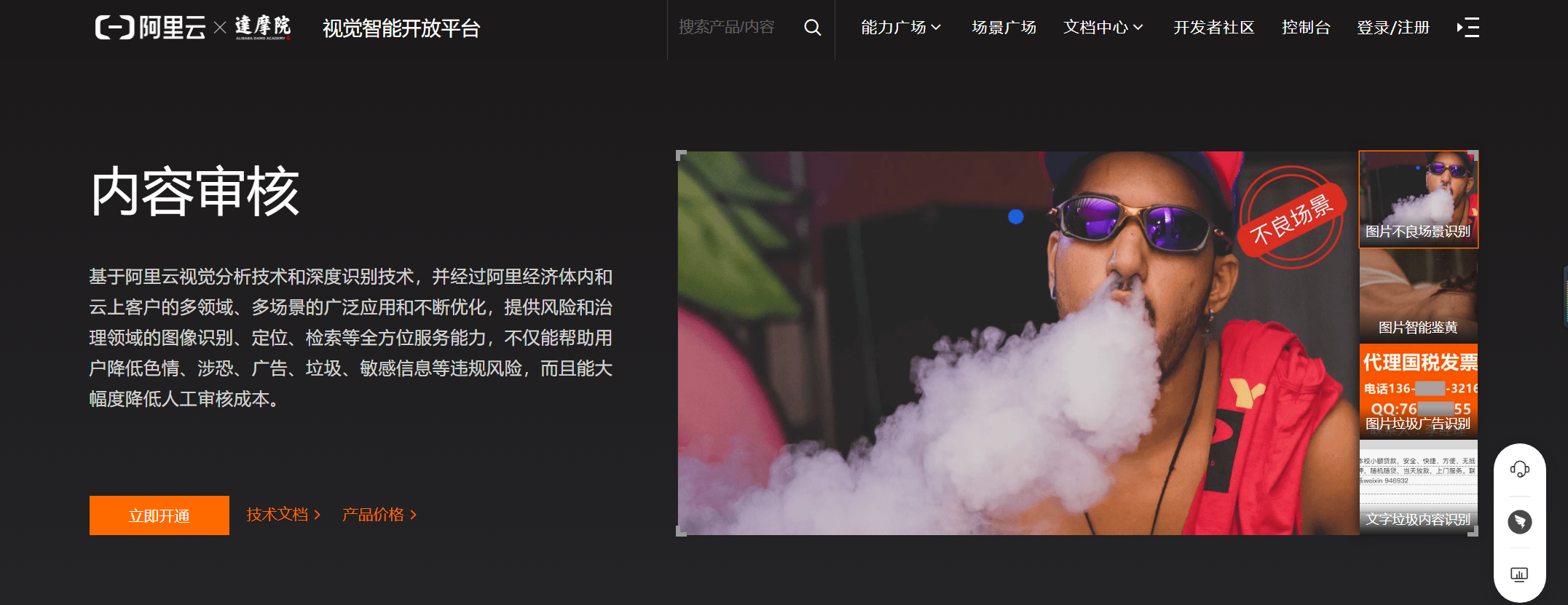

1. Activate the service

URL: https://vision.aliyun.com/imageaudit?spm=5176.11065253.1411203.3.7e8153f6mehjzV

2. Add the shared POM dependencies

<!-- JSON conversion dependency -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>2.0.25</version>

</dependency>

<!-- Dependency shared by text moderation and image moderation -->

<dependency>

<groupId>com.aliyun</groupId>

<artifactId>imageaudit20191230</artifactId>

<version>2.0.6</version>

</dependency>

3. Text moderation

3.1 Core code

private static final String accessKeyId = "<your-access-key-id>";

private static final String accessKeySecret = "<your-access-key-secret>";

@PostMapping("/scanText")

public String scanText(@RequestBody HashMap<String,String> reqMap) throws Exception {

// Get the text to check

String text = reqMap.get("text");

System.out.println("text="+text);

// Map that holds the fields of the returned result

Map<String,String> resMap = new HashMap<>();

// Instantiate the client

Config config = new Config()

// Required: your AccessKey ID

.setAccessKeyId(accessKeyId)

// Required: your AccessKey Secret

.setAccessKeySecret(accessKeySecret);

config.endpoint = "imageaudit.cn-shanghai.aliyuncs.com";

Client client = new Client(config);

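/**

* spam: spam text detection

* politics: politically sensitive text detection

* abuse: abusive text detection

* terrorism: violent or terrorist text detection

* porn: pornographic text detection

* flood: flooding (filler) text detection

* contraband: prohibited content detection

* ad: advertising text detection

*/

// Set the labels to check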

ScanTextRequest.ScanTextRequestLabels labels0 = new ScanTextRequest.ScanTextRequestLabels()

.setLabel("politics");

ScanTextRequest.ScanTextRequestLabels labels1 = new ScanTextRequest.ScanTextRequestLabels()

.setLabel("contraband");

ScanTextRequest.ScanTextRequestLabels labels2 = new ScanTextRequest.ScanTextRequestLabels()

.setLabel("terrorism");

ScanTextRequest.ScanTextRequestLabels labels3 = new ScanTextRequest.ScanTextRequestLabels()

.setLabel("abuse");

ScanTextRequest.ScanTextRequestLabels labels4 = new ScanTextRequest.ScanTextRequestLabels()

.setLabel("spam");

ScanTextRequest.ScanTextRequestLabels labels5 = new ScanTextRequest.ScanTextRequestLabels()

.setLabel("ad");

// Set the content to check

ScanTextRequest.ScanTextRequestTasks tasks0 = new ScanTextRequest.ScanTextRequestTasks()

.setContent(text);

ScanTextRequest scanTextRequest = new ScanTextRequest()

.setTasks(java.util.Arrays.asList(

tasks0

))

.setLabels(java.util.Arrays.asList(

labels0,

labels1,

labels2,

labels3,

labels4,

labels5

));

RuntimeOptions runtime = new RuntimeOptions();

ScanTextResponse response = null;

try {

// If you copy and run this code, print the API response yourself

response = client.scanTextWithOptions(scanTextRequest, runtime);

resMap.put("data",JSON.toJSONString(response.getBody().getData().getElements().get(0).getResults()));

// Read the details from the response and work out which categories the text hit; the Chinese messages below name each category

List<ScanTextResponseBody.ScanTextResponseBodyDataElementsResultsDetails> responseDetails = response.getBody().getData().getElements().get(0).getResults().get(0).getDetails();

if (responseDetails.size()>0){

resMap.put("state","block");

StringBuilder error = new StringBuilder("检测到:");

for (ScanTextResponseBody.ScanTextResponseBodyDataElementsResultsDetails detail : responseDetails) {

if ("abuse".equals(detail.getLabel())) error.append("辱骂内容、");

if ("spam".equals(detail.getLabel())) error.append("垃圾内容、");

if ("politics".equals(detail.getLabel())) error.append("敏感内容、");

if ("terrorism".equals(detail.getLabel())) error.append("暴恐内容、");

if ("porn".equals(detail.getLabel())) error.append("黄色内容、");

if ("flood".equals(detail.getLabel())) error.append("灌水内容、");

if ("contraband".equals(detail.getLabel())) error.append("违禁内容、");

if ("ad".equals(detail.getLabel())) error.append("广告内容、");

}

resMap.put("msg",error.toString());

return JSON.toJSONString(resMap);

}else {

resMap.put("state","pass");

resMap.put("msg","未检测出违规!");

return JSON.toJSONString(resMap);

}

} catch (Exception _error) {

resMap.put("state","review");

resMap.put("msg","阿里云无法进行判断,需要人工进行审核,错误详情:"+_error);

return JSON.toJSONString(resMap);

}

}

3.2 Call result

- req

{

"text":"hello word! 卧槽6666"

}

- res

{

"state": "block",

"msg": "检测到:辱骂内容、",

"data": {

"details": [{ "contexts": [{ "context": "卧槽" }], "label": "abuse" }],

"label": "abuse",

"rate": 99.91,

"suggestion": "block"

}

}

4. Image moderation

4.1 Core code

private static final String accessKeyId = "<your-access-key-id>";

private static final String accessKeySecret = "<your-access-key-secret>";

@PostMapping("/scanImage")

public String scanImage(@RequestBody HashMap<String,String> reqMap) throws Exception {

// Get the URL of the image to check

String image = reqMap.get("image");

System.out.println("image="+image);

// Map that holds the fields of the returned result

Map<String,String> resMap = new HashMap<>();

// Instantiate the client

Config config = new Config()

// Required: your AccessKey ID

.setAccessKeyId(accessKeyId)

// Required: your AccessKey Secret

.setAccessKeySecret(accessKeySecret);

config.endpoint = "imageaudit.cn-shanghai.aliyuncs.com";

Client client = new Client(config);

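// Set the content to check

ScanImageRequest.ScanImageRequestTask task0 = new ScanImageRequest.ScanImageRequestTask().setImageURL(image);

// Build the detection request

/**

* porn: pornographic image detection

* terrorism: sensitive image content detection and risky-person detection

* ad: image spam and advertising detection

* live: undesirable scene detection

* logo: logo detection

*/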

ScanImageRequest scanImageRequest = new ScanImageRequest()

.setTask(java.util.Arrays.asList(

task0

))

.setScene(java.util.Arrays.asList(

"porn","terrorism","live"

));

RuntimeOptions runtime = new RuntimeOptions();

// Call the API to get the detection result

ScanImageResponse response = client.scanImageWithOptions(scanImageRequest, runtime);

resMap.put("data",JSON.toJSONString(response.getBody().getData().getResults().get(0)));

// Parse the detection result

try {

List<ScanImageResponseBody.ScanImageResponseBodyDataResultsSubResults> responseSubResults = response.getBody().getData().getResults().get(0).getSubResults();

for(ScanImageResponseBody.ScanImageResponseBodyDataResultsSubResults responseSubResult : responseSubResults){

// Compare string contents with equals(); != would compare object references

if(!"pass".equals(responseSubResult.getSuggestion())){

resMap.put("state",responseSubResult.getSuggestion());

String msg = "";

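// In the sample response the scene field carries "porn", "terrorism", "live" (label is e.g. "normal"), so switch on the scene

switch (responseSubResult.getScene()){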

case "porn":

msg = "图片智能鉴黄未通过";

break;

case "terrorism":

msg = "图片敏感内容识别、图片风险人物识别未通过";

break;

case "ad":

msg = "图片垃圾广告识别未通过";

break;

case "live":

msg = "图片不良场景识别未通过";

break;

case "logo":

msg = "图片Logo识别未通过";

break;

}

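// Include the message in the result; otherwise msg is computed but never returned

resMap.put("msg",msg);

return JSON.toJSONString(resMap);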

}

}

} catch (Exception error) {

resMap.put("state","review");

resMap.put("msg","发生错误,详情:"+error);

return JSON.toJSONString(resMap);

}

resMap.put("state","pass");

return JSON.toJSONString(resMap);

}

4.2 Call result

- req

{

"image":"https://jupite-aliyun.oss-cn-hangzhou.aliyuncs.com/second_hand_shop/client/img/goodImgs/1683068284289.jpg"

}

- res

{

"data": {

"imageURL": "http://jupite-aliyun.oss-cn-hangzhou.aliyuncs.com/second_hand_shop/client/img/goodImgs/1683068284289.jpg",

"subResults": [

{

"label": "normal",

"rate": 99.9,

"scene": "porn",

"suggestion": "pass"

},

{

"label": "normal",

"rate": 99.88,

"scene": "terrorism",

"suggestion": "pass"

},

{

"label": "normal",

"rate": 99.91,

"scene": "live",

"suggestion": "pass"

}

]

},

"state": "pass"

}
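
The `subResults` array in the response above holds one verdict per scene. As a rough sketch of how those verdicts could be folded into a single `state`, the example below picks the strictest suggestion. It assumes `suggestion` is one of `pass`, `review`, or `block`; the `SubResult` class and the `severity` and `overallState` helpers are hypothetical stand-ins for illustration and are not part of the Alibaba Cloud SDK.

```java
import java.util.Arrays;
import java.util.List;

class ImageAuditSummary {

    /** Hypothetical stand-in for one "subResults" entry (scene and suggestion only). */
    public static final class SubResult {
        public final String scene;
        public final String suggestion;

        public SubResult(String scene, String suggestion) {
            this.scene = scene;
            this.suggestion = suggestion;
        }
    }

    /** Ranks a suggestion so that the strictest one wins: block > review > pass. */
    public static int severity(String suggestion) {
        if ("block".equals(suggestion)) return 2;
        if ("pass".equals(suggestion)) return 0;
        // "review", null, and any unknown value are all treated as needing manual review
        return 1;
    }

    /** Returns "block", "review", or "pass" for the whole image. */
    public static String overallState(List<SubResult> subResults) {
        int worst = 0;
        for (SubResult r : subResults) {
            worst = Math.max(worst, severity(r.suggestion));
        }
        if (worst == 2) return "block";
        if (worst == 1) return "review";
        return "pass";
    }

    public static void main(String[] args) {
        // Same scenes and suggestions as the sample response above
        List<SubResult> sample = Arrays.asList(
                new SubResult("porn", "pass"),
                new SubResult("terrorism", "pass"),
                new SubResult("live", "pass"));
        System.out.println(overallState(sample)); // prints "pass"
    }
}
```

Unlike returning on the first non-`pass` entry, this approach still reports `block` when an earlier scene only asked for `review`.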
